
AI Agents with the Human-in-the-Loop Approach - The Answer to More Reliable and Safer AI

Updated on August 27, 2025 (9 min read)


Having AI agents work in the background for your business while you focus on creative and strategic work sounds like a dream come true. But can we really trust AI to make good decisions? Air Canada learned the hard way that leaving AI to roam completely free might not be the best idea: its chatbot gave a customer incorrect information about the airline's bereavement fare refund policy, and a Canadian tribunal held Air Canada liable for that answer.

So, is it safe to let AI agents automate parts of our work, or is that too risky? The key is understanding that AI agents aren’t meant to replace humans; they’re designed to work alongside them, boosting productivity and supporting better decisions. To keep them on track, businesses should adopt a human-in-the-loop approach, where employees can monitor the AI’s work and step in whenever needed.

In this article, we will explain what a human-in-the-loop approach is and how to use it to make your AI agents more reliable and trustworthy.

Key takeaways:

- Human-in-the-loop (HITL) AI agents combine automation with human oversight to ensure that decisions are accurate, ethical, and aligned with business goals.

- HITL AI agents enhance reliability, safety, and accuracy while ensuring clear accountability in business workflows.

- HITL agents request human input for ambiguous, complex, or high-stakes decisions, where human context adds critical value.

- By learning from human feedback, these AI agents continuously improve, becoming more precise over time.

- In enterprise environments, HITL is crucial for building AI agents that are both high-performing and trustworthy.

What are AI Agents with Human-in-the-Loop?

AI agents with a human-in-the-loop (HITL) approach are software systems that integrate human oversight, decision-making, or intervention at key stages of the workflow. This design blends the speed and scalability of AI with the judgment and emotional intelligence that humans provide.

Rather than running in a fully automated, “hands-off” mode, HITL agents collaborate with people - learning from their feedback, asking for guidance, and deferring critical or ambiguous decisions to a human expert.

Human-in-the-loop AI agents can execute many steps independently - collecting data, analyzing inputs, generating recommendations, or even taking routine actions - but they are built to pause and escalate when encountering uncertainty, ethical considerations, or high-impact decisions.

It’s important to note that embedded human oversight is a feature, not a flaw - instead of treating human intervention as a failure of automation, HITL treats it as a strategic safety net. Humans provide contextual understanding that the AI might lack, verify correctness, and ensure alignment with organizational values and quality standards.


Why is HITL Important for AI Agents?

The key advantages of implementing HITL oversight into AI agents’ workflows include:

- Higher accuracy and reliability: AI can make fast but brittle decisions in unpredictable environments. HITL AI agents mitigate the risks of errors, bias, and overconfidence. By providing feedback and assistance at crucial points, human reviewers help refine the AI model and improve the accuracy of its output.

- Efficient regulatory compliance: In fields like finance, healthcare, and law, many regulations require human accountability. HITL AI agents help meet these requirements by building in human oversight while still leveraging AI efficiency.

- Stronger user trust: By allowing humans to review or override AI actions, organizations build trust and encourage adoption among users who may be wary of full automation.

- Strategic oversight over operational control: AI agents can take over much of the operational burden, automating repetitive tasks and asking humans to step in only in ambiguous or high-stakes cases. This allows businesses to increase operational efficiency while keeping humans involved where it matters most.

At Creatio, we believe the future of automation lies in humans and AI working side by side. Our approach ensures that every AI decision is guided by human oversight and backed by governance and uncompromising data security.

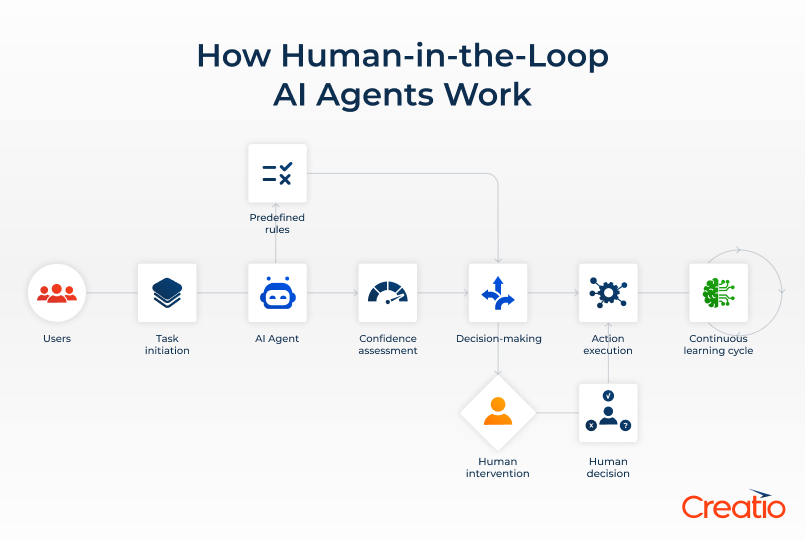

How Human-in-the-Loop AI Agents Work

Human-in-the-loop (HITL) AI agents are not just AI plus a person. They are deliberately engineered systems in which human involvement is part of the workflow from the start, not bolted on as an afterthought.

Here’s how human-in-the-loop for AI agents works:

1. Task initiation

An AI agent receives input from a human to perform a task or is triggered by a specific event, such as a customer query or an incoming document. It processes the data, applies rules or models, and generates a proposed plan of action or recommendation.

Next, the AI agent autonomously executes the necessary workflow, working through the sequence of tasks. Along the way, it may encounter a complex situation it can't resolve, in which case it requests human intervention.
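To make the pause-and-escalate idea concrete, here is a minimal Python sketch of an agent working through a sequence of steps and stopping when one of them cannot be resolved. The `Workflow` class, the `NeedsHuman` exception, the step functions, and the 500 refund limit are all hypothetical illustrations, not Creatio's implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Step = Callable[[Dict], Dict]  # a step takes the workflow state and returns an updated copy


class NeedsHuman(Exception):
    """Raised by a step that cannot resolve its situation on its own (hypothetical)."""

    def __init__(self, reason: str):
        super().__init__(reason)
        self.reason = reason


@dataclass
class Workflow:
    steps: List[Step]
    state: Dict = field(default_factory=dict)
    position: int = 0                 # index of the next step to run
    waiting_on: Optional[str] = None  # escalation reason while paused

    def run(self) -> str:
        """Run steps in order until all are done or one escalates to a human."""
        while self.position < len(self.steps):
            try:
                self.state = self.steps[self.position](self.state)
            except NeedsHuman as exc:
                self.waiting_on = exc.reason
                return "paused"
            self.position += 1
        return "done"

    def resume(self, human_input: Dict) -> str:
        """Use the reviewer's input in place of the escalated step, then continue."""
        self.state = {**self.state, **human_input}
        self.waiting_on = None
        self.position += 1
        return self.run()


# Hypothetical steps for a refund request.
def classify(state: Dict) -> Dict:
    return {**state, "category": "refund"}


def decide_refund(state: Dict) -> Dict:
    if state.get("amount", 0) > 500:  # hypothetical automatic-approval limit
        raise NeedsHuman("refund above automatic limit")
    return {**state, "refund_approved": True}


def notify(state: Dict) -> Dict:
    return {**state, "notified": True}


wf = Workflow([classify, decide_refund, notify], state={"amount": 800})
print(wf.run())                                # paused
print(wf.waiting_on)                           # refund above automatic limit
print(wf.resume({"refund_approved": False}))   # done
```

Keeping the position and state on the workflow object is what lets the agent pick up exactly where it stopped once a human has answered.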

2. Predefined rules or confidence assessment

Not every action requires human attention. To determine when human oversight is needed, you can apply predefined rules or implement a confidence assessment.

When building a workflow or implementing an AI agent into an existing one, you can add a step that requires human approval at a specific point. Sounds complicated? It doesn't have to be! With AI-native no-code platforms such as Creatio, this step is a breeze: all you have to do is add a reusable component to the workflow that makes the AI agent stop and ask for human intervention, no coding required.

Rather than relying on predefined rules for when to involve human oversight, the AI agent can determine this autonomously by evaluating its own confidence level - essentially, assessing how certain it is that it can complete the task accurately without human intervention.
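The article does not say how an agent measures its own confidence. One common proxy, shown here purely as an assumption, is self-consistency: ask the model the same question several times and treat the share of answers that agree with the most frequent one as a confidence score. The `self_consistency` function is hypothetical, and the lambda stands in for a real model call.

```python
import itertools
from collections import Counter
from typing import Callable, Tuple


def self_consistency(sample_answer: Callable[[], str], n: int = 5) -> Tuple[str, float]:
    """Ask the same question n times; return the majority answer and its vote share."""
    if n < 1:
        raise ValueError("n must be at least 1")
    answers = [sample_answer() for _ in range(n)]
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / n


# Stand-in for a model that does not always give the same reply (hypothetical).
replies = itertools.cycle(["refund", "refund", "exchange", "refund", "refund"])
answer, confidence = self_consistency(lambda: next(replies), n=5)
print(answer, confidence)  # refund 0.8
```

Agreement is only a heuristic: a model can be consistently wrong, so a high score means "stable", not "correct".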

3. Decision-making

Next, the AI agent decides how to proceed based on this assessment. If its confidence is high, it proceeds without human intervention. For low-confidence or high-impact scenarios, however, it pauses and consults a human.

In workflows with predefined rules, the AI agent always pauses and asks for assistance whenever it encounters a HITL requirement.
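Predefined rules and a confidence check can be combined into a single routing function. In this sketch, the `Proposal` fields, the `HIGH_IMPACT_ACTIONS` set, and the 0.85 threshold are hypothetical values chosen for illustration; the confidence score can come from whatever estimate the agent uses.

```python
from dataclasses import dataclass

# Hypothetical rule: these actions always go to a human, whatever the confidence.
HIGH_IMPACT_ACTIONS = {"issue_refund", "freeze_account", "send_contract"}
CONFIDENCE_THRESHOLD = 0.85  # hypothetical cut-off; would be tuned per workflow


@dataclass
class Proposal:
    action: str
    confidence: float            # agent's self-assessed confidence, 0.0 to 1.0
    hitl_required: bool = False  # set when the workflow has an explicit approval step


def route(p: Proposal) -> str:
    """Return 'human' if the proposal must pause for review, otherwise 'auto'."""
    if p.hitl_required:                      # predefined rule: always pause
        return "human"
    if p.action in HIGH_IMPACT_ACTIONS:      # high impact: pause regardless of confidence
        return "human"
    if p.confidence < CONFIDENCE_THRESHOLD:  # low confidence: pause
        return "human"
    return "auto"


print(route(Proposal("send_faq_link", 0.95)))  # auto
print(route(Proposal("issue_refund", 0.99)))   # human
```

Checking the rules before the confidence score means a confident agent still cannot skip an approval step the workflow designer put in on purpose.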

4. Human intervention (when needed)

If an AI agent requires additional input to proceed with the task, it will present the scenario to the human reviewer, highlight uncertainties or anomalies, and provide some options.
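A reviewer can act quickly only if the escalation carries enough context. Below is one possible shape for such a request, with a helper that renders it as short text for a review queue or chat message; the `ReviewRequest` class and its field names are assumptions, not a documented format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ReviewRequest:
    case_id: str
    summary: str                                            # what the agent was trying to do
    uncertainties: List[str] = field(default_factory=list)  # what it could not resolve
    options: List[str] = field(default_factory=list)        # candidate actions, best first

    def render(self) -> str:
        """Format the request as plain text for a human reviewer."""
        lines = [f"Case {self.case_id}: {self.summary}"]
        lines += [f"  ? {u}" for u in self.uncertainties]
        lines += [f"  {i}. {o}" for i, o in enumerate(self.options, start=1)]
        return "\n".join(lines)


req = ReviewRequest(
    case_id="C-1042",
    summary="Customer asks for a refund on a non-refundable fare",
    uncertainties=["Customer cites a family emergency; exception policy unclear"],
    options=["Offer travel credit", "Approve partial refund", "Decline politely"],
)
print(req.render())
```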

There are three common approaches to incorporating human input:

- Inline HITL: Humans review every AI output before action. This is common in high-liability environments, such as the legal and medical industries.

- Selective HITL: Humans only review predefined cases or those below a confidence threshold. This approach is ideal for balancing efficiency and safety.

- Batch review HITL: AI acts in real time, but human reviewers audit a sample periodically to spot systemic issues. This approach is often used in content moderation.
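The three approaches differ mainly in which AI outputs a human sees, and when. This minimal sketch returns the indices of outputs to review under each mode; the function name, the 0.85 threshold, and the 10% default sample rate are hypothetical, and the selective mode checks only the confidence threshold (predefined-rule cases are omitted for brevity).

```python
import random
from typing import List, Sequence

CONFIDENCE_THRESHOLD = 0.85  # hypothetical


def select_for_review(mode: str, confidences: Sequence[float],
                      sample_rate: float = 0.1, seed: int = 0) -> List[int]:
    """Return indices of AI outputs a human should review under the given HITL mode."""
    indices = range(len(confidences))
    if mode == "inline":     # every output is reviewed before any action is taken
        return list(indices)
    if mode == "selective":  # only outputs below the confidence threshold
        return [i for i in indices if confidences[i] < CONFIDENCE_THRESHOLD]
    if mode == "batch":      # outputs were already acted on; audit a random sample later
        k = max(1, round(len(confidences) * sample_rate)) if confidences else 0
        return sorted(random.Random(seed).sample(list(indices), k))
    raise ValueError(f"unknown mode: {mode}")


scores = [0.99, 0.6, 0.9, 0.7, 0.95]
print(select_for_review("inline", scores))     # [0, 1, 2, 3, 4]
print(select_for_review("selective", scores))  # [1, 3]
```

In batch mode the sample size is fixed by the rate rather than by confidence, which is what makes it useful for spotting systemic issues the agent is confidently wrong about.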

5. Human decision

The human reviewer can approve, modify, or reject the AI agent’s suggestion based on the provided information. They can also take over to make an important decision or continue the conversation with a client to ensure their sensitive case is resolved with care.
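The reviewer's options map naturally onto a small set of verdicts. In this hypothetical sketch, `resolve` turns a verdict into the action the agent should execute, or `None` when the agent must not act (a rejection, or a human taking over the case); the `Verdict` and `Review` names are illustrative only.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Verdict(Enum):
    APPROVE = "approve"
    MODIFY = "modify"
    REJECT = "reject"
    TAKE_OVER = "take_over"


@dataclass
class Review:
    verdict: Verdict
    edited_action: Optional[str] = None  # required when the verdict is MODIFY


def resolve(proposed_action: str, review: Review) -> Optional[str]:
    """Return the action the agent should execute, or None if it must not act."""
    if review.verdict is Verdict.APPROVE:
        return proposed_action
    if review.verdict is Verdict.MODIFY:
        if not review.edited_action:
            raise ValueError("a MODIFY verdict needs an edited_action")
        return review.edited_action
    # REJECT drops the proposal; TAKE_OVER hands the case to the human entirely.
    return None


print(resolve("refund 50 USD", Review(Verdict.MODIFY, "refund 30 USD")))  # refund 30 USD
```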

6. Action execution

The approved decision, whether an AI suggestion or a human-adjusted one, is executed, and the AI agent can perform the rest of the tasks to achieve its goal.

7. Continuous learning cycle

Decisions made by humans are stored in the AI agent's memory and used to improve the AI model over time. The more feedback the agent receives, the more opportunities it has to learn, and the less assistance it will need in the future. Investing time in guiding AI agents therefore pays off, as they become smarter and more reliable.
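The article does not detail how human decisions feed back into the agent. A lightweight alternative to retraining, shown here as an assumption, is to track how often reviewers accept the agent's proposals unchanged for each task category and to stop escalating a category only once it has a strong track record. This adapts the escalation policy rather than the model itself; the `FeedbackLog` class and its thresholds are hypothetical.

```python
from collections import defaultdict


class FeedbackLog:
    """Records reviewer outcomes per task category (hypothetical design)."""

    def __init__(self, min_reviews: int = 50, min_approval_rate: float = 0.95):
        self.min_reviews = min_reviews
        self.min_approval_rate = min_approval_rate
        self.reviewed = defaultdict(int)  # category -> number of human reviews
        self.approved = defaultdict(int)  # category -> proposals approved unchanged

    def record(self, category: str, approved_unchanged: bool) -> None:
        self.reviewed[category] += 1
        if approved_unchanged:
            self.approved[category] += 1

    def approval_rate(self, category: str) -> float:
        n = self.reviewed[category]
        return self.approved[category] / n if n else 0.0

    def needs_review(self, category: str) -> bool:
        """Keep escalating until a category has enough reviews and a high approval rate."""
        if self.reviewed[category] < self.min_reviews:
            return True
        return self.approval_rate(category) < self.min_approval_rate


log = FeedbackLog(min_reviews=10, min_approval_rate=0.9)
for _ in range(10):
    log.record("faq_answer", approved_unchanged=True)
print(log.needs_review("faq_answer"))  # False
```

Once a category stops being escalated it also stops generating feedback, so periodic batch audits are a sensible way to keep the log current.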

Real-World Examples of Human and AI Agent Partnership

The real question is when to implement a human-in-the-loop approach and when you can let AI agents do their thing without worrying about consequences. The key is to find the right balance between oversight and automation to ensure you benefit from productivity gains while staying safe.

Below, you’ll find a few real-world examples that clearly demonstrate when and how to use HITL:

| Use case | AI agent | Human | Result |
|---|---|---|---|
| Answering customer inquiries | An AI chatbot answers typical questions using internal knowledge bases. When it encounters a nuanced question, an emotional situation, or a high-value case, it escalates the conversation to a human representative along with a summary of previous interactions. | A customer service representative takes over the conversation and answers the customer's questions using their expertise and experience. | Customers get faster answers to routine questions while still being able to reach a human for more complicated or sensitive inquiries. |
| Healthcare diagnostics | An AI agent analyzes X-rays or MRI scans, highlighting areas of concern that might be difficult for humans to spot. | A radiologist analyzes the medical image and reviews the AI's suggestions to make the final call. | Faster, more reliable diagnoses, with a qualified medical professional remaining in control. |
| Financial fraud detection | An AI agent monitors transactions, flags unusual behavior in real time, and alerts financial analysts to potential fraud. | A human analyst decides whether to freeze accounts and alert customers or to dismiss the AI agent's flag as irrelevant. | More reliable fraud detection that combines AI speed with human expertise. |
| Code co-development | AI agents generate code snippets, find bugs, and suggest improvements. | The developer reviews the AI's suggestions and decides whether to approve, decline, or adjust them. | Faster development and fewer errors. |
| Content moderation on social media platforms | AI filters out obvious violations (spam, nudity, etc.) but escalates borderline cases, such as political satire, to human moderators. | Human moderators review the cases escalated by AI agents and decide whether to allow or block each piece of content. | Autonomous AI agents moderate continuously in real time but rely on human expertise in ambiguous cases, keeping moderation effective. |
| Legal document creation | AI extracts key clauses from contracts and fills out documents. | Lawyers review the documents for correctness, legal nuance, and risk exposure. | AI agents streamline the preparation of legal documents, allowing lawyers to focus on their cases rather than paperwork. |
| HR recruitment | An AI agent screens resumes against the ideal candidate profile and presents a shortlist of top candidates to recruiters. | A recruiter reviews the shortlist and chooses the most suitable candidates to invite into the process. | Recruiters can focus on interviews rather than sorting through thousands of resumes, and the HR department streamlines recruitment while maintaining high standards and a human touch. |
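The fraud-detection row follows the same flag-then-decide pattern. As a minimal sketch, a z-score against a customer's past transaction amounts stands in for whatever detection model a real system would use (an assumption; production fraud models are far richer). Anything flagged goes to an analyst queue instead of triggering an automatic account freeze; `flag_unusual` and the cut-off of 3.0 are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence


def flag_unusual(history: Sequence[float], new_amounts: Sequence[float],
                 z_cutoff: float = 3.0) -> List[float]:
    """Return new amounts whose z-score against the history exceeds z_cutoff."""
    if len(history) < 2:
        return list(new_amounts)  # too little history: let an analyst look at everything
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return [a for a in new_amounts if a != mu]
    return [a for a in new_amounts if abs(a - mu) / sigma > z_cutoff]


history = [42.0, 38.5, 51.0, 45.2, 40.0, 47.3]
analyst_queue = flag_unusual(history, [44.0, 1250.0, 39.9])
print(analyst_queue)  # [1250.0]
# The agent only queues alerts; freezing the account stays the analyst's decision.
```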

Powering Human–AI Collaboration with Creatio’s HITL Agents

Creatio, known for its agentic CRM and workflow platform with no-code and AI at its core, offers AI agents that embody human-in-the-loop principles. Creatio's AI agents are designed to work alongside people, blending machine intelligence with human judgment to unleash the full potential of human and digital talent working in harmony. Rather than seeking to fully automate decision-making, Creatio lets you stay in control of every step of your AI-powered workflows.

In Creatio’s platform, AI agents are deeply embedded into everyday workflows across sales, marketing, customer service, and revenue operations. They automate actions, deliver contextual insights, and recommend the next steps in real time, allowing humans to focus on creative and strategic tasks.

Creatio’s AI agents go beyond making recommendations; they clearly explain the reasoning behind them, outlining decision pathways, data sources, and underlying logic for full transparency. This empowers human users to understand the basis of each suggestion and apply their own expertise before acting. By combining clarity, oversight, and continuous improvement, Creatio ensures its AI delivers responsible, trustworthy outcomes.

This approach has particular value for Creatio’s customer base, many of whom operate in complex B2B environments with long sales cycles and high-stakes decisions. In industries like finance and healthcare, where compliance requirements are strict, the HITL model also provides a safeguard against regulatory risk. At the same time, by making the AI’s reasoning transparent, Creatio helps build trust and encourages adoption among teams that might otherwise be wary of automation.

The Bottom Line: Human-in-the-Loop for AI Agents is the Key to Reliable AI

AI agents with a human-in-the-loop approach offer businesses the best of both worlds - combining the speed and scalability of automation with the context and emotional intelligence only humans can provide.

In complex business environments, where decisions often have regulatory, financial, or reputational consequences, HITL is not just a safeguard - it’s a core principle. By embedding human oversight, enterprises ensure their AI agents remain transparent, reliable, and safe.

At Creatio, we’ve experienced firsthand that when humans and AI work side by side, organizations can streamline processes and achieve smarter and safer outcomes that continuously improve over time.